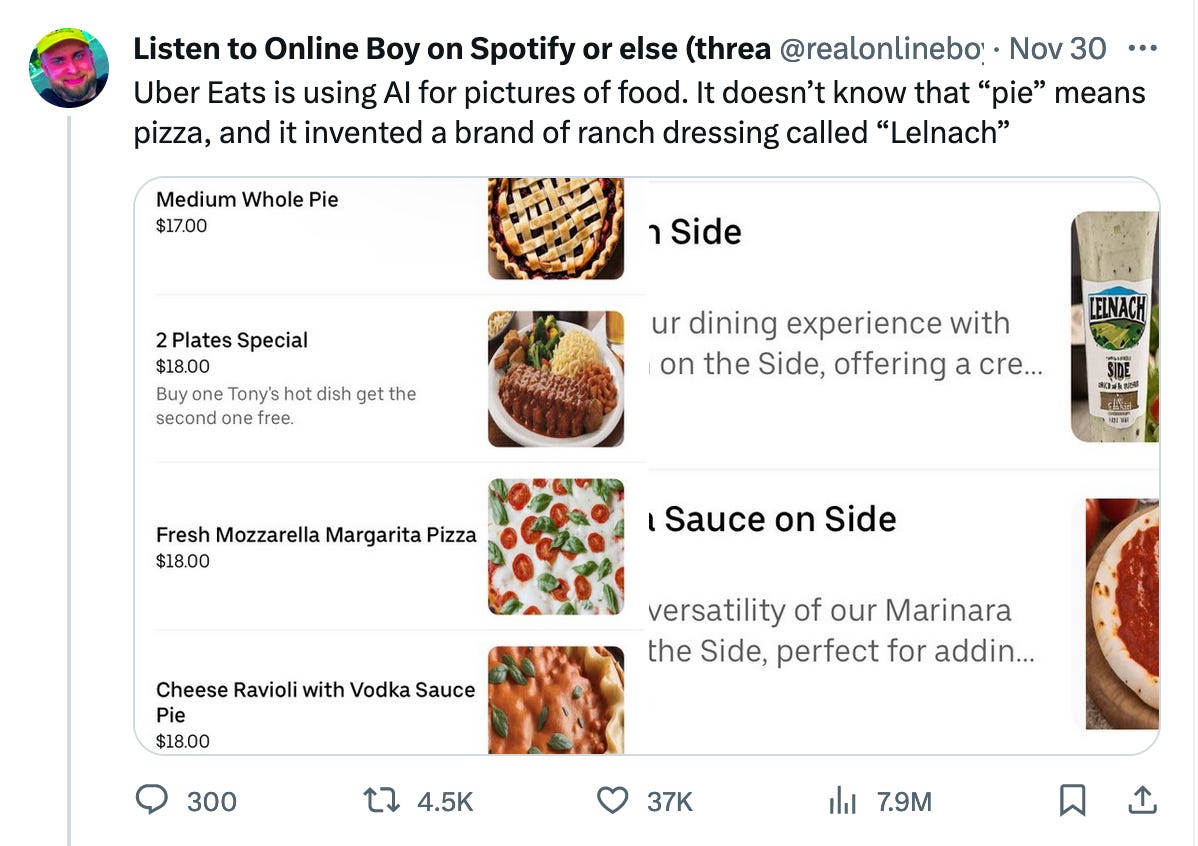

This popped up on my Twitter feed this morning:

Before I say anything else, I should be clear that I’m not anti-tech. I have as much of the stuff as anyone else, and I used to work in IT. It has its time and place.

That said, I am concerned, and have been for a while, that tech is progressing too rapidly for the human brain to handle it well (social media, I’m looking at you). Do we know what we want tech for? Do we know what we genuinely need it to do? Or are we creating things just for the sake of creating them?

Anyone who’s ever read Frankenstein knows what can happen if we’re choosing Option C. Again, I don’t intend to be alarmist, and I don’t think I am. There are unintended consequences to almost anything in life, but to nothing so much as the wanton overuse of nascent AI.

A lot of responses to this tweet insisted that these images had to have been deliberately chosen by the restaurant (because, obviously, if you’re running a pizza joint, you really want a picture of a cherry pie where your pizza should be), or that it was a “ghost kitchen” and therefore not a real listing. But it is a real brick-and-mortar shop, and the guy who posted these screenshots called to verify that they had no idea where the images on their page had come from, and were working to fix it.

The fact that the images appeared without warning or their knowledge raises the question of corporate overreach as well as bad AI, of course.

The thing I find fascinating here is that it’s clear that AI is, first and foremost, not actually intelligent. Calling it AI implies that there’s some actual thought going on, and certainly anyone who’s seen what ChatGPT is capable of putting out has reason to anthropomorphize that way, even if there’s a world of difference between a large language model and Star Trek’s Lt. Commander Data.

AI is a misnomer, but how humans use language does influence how we think of things, and I think we’re going to see more and more of this sort of confusion in the near future. Is there really any question that someone at Uber (I want to say a clueless intern, but let’s be real—the CEO is just as likely) decided that AI could do the job faster and better than a human could, and flipped that switch?

There I go, sounding all alarmist again. I’m not sure it’s alarmism, though, when this sort of thing starts to creep in even though the tech isn’t up for the job—and actual humans are forced out of the equation. It’s pretty obviously the result of someone’s desire for a “quick fix.” It fixed nothing, but by golly, it got some photos on that restaurant’s page!

I recently spoke to the head of a college career center, who told me that she advises writers to know the ins and outs of using ChatGPT these days, because companies are starting to use it for their lower-level writing needs, which means anyone applying for a writing job has to know how it works and be able to use it.

You can guess how I reacted to this news—and it only got worse when she told me that they’re already starting to see entry-level writing jobs disappear as a result of the new tech. Every reason I could think of for human writers producing a superior product—human writers have a style and a voice all their own, for instance—had an answer (you can tell ChatGPT not only to write you a five-paragraph essay about penguins, but also to do it in the style of P.G. Wodehouse…and it will).

In case you were wondering, she also confirmed that the thinking is—I really wish I were surprised—that companies don’t have to pay ChatGPT, so why pay a human being to write for them instead? And the technology is improving exponentially. A year from now, we may look longingly on late 2023.

As a longtime science fiction reader/viewer, this all sounds so incredibly dystopian to me that I can’t believe it’s actually happening. At the same time, I’ve been around long enough to be pretty familiar with the way capitalism thinks, so… yeah. It was inevitable.

About ten years ago, I went to a conference for work where a presenter talked about the jobs that AI was going to take over. It wasn’t just writing—oh, no. Some of the things on his list were shocking: Doctor. Lawyer. Actor.

I don’t know about you, but I’m not interested in an AI doctor or lawyer, and I’m also not interested in watching the equivalent of Siri on the big screen.

None of this is too far off, either. Just last week, we got to hear the first AI “singer/songwriter,” a creation called Anna Indiana.

Is she great? No. Is she good? Not even. But this, too, will continue to improve, so I don’t take much comfort from MusicRadar’s headline about dear Anna: AI pop singer Anna Indiana's fully AI-generated debut single suggests AI poses less of a threat than we thought: “I’m sure my microwave will love listening to this”

We already live in an era where actual human musicians barely earn any money anymore.

I don’t want to imagine a world where we only listen to AI-generated music, or read AI-generated novels, or look at AI-generated art, because, when I try, what comes to mind is being trapped in a small room painted Builder’s Beige for the rest of my life. What on earth is the point?

Maybe those of us who are wary about where AI is going will turn out to be wrong. I hope so. I’d love to think that there will still be a demand for human-generated creativity; I just hope it doesn’t end up being a niche product.

I’m going to leave you with this video of Stephen Fry reading a letter from Nick Cave to two fans who asked him about creativity and ChatGPT. I can’t possibly say it any better than he does:

What do you think? Am I too worried about this? Not concerned enough? Got another perspective? Please share it in a comment!

You know how I feel about this. I don't use ChatGPT because I don't want to help it get smarter, though AI has been fed my cookbook without my consent. I know it's getting smarter without me, but I still refuse to buy into it. If writing turns into a job where you just plug directions into a bot and ask it to write a story, that's not a job I want. Just because it makes something easier doesn't make it better. I'm not anti-tech either. I can appreciate that technology is helpful in life, but I'm also aware of the harm it does. I liken the rise of AI to global warming. I know I can't singlehandedly stop greenhouse gas emissions, but I do my best to limit my damage to the planet. I don't eat meat, we only have one car for our household of two, I recycle, compost, and have no kids. I've tried to make my carbon footprint as small as possible. I just think if writers fully embrace AI and ChatGPT, they can't complain when the robots take their jobs.

Yes, we should be worried. Not only about the outcome of the heavy use of AI, but also about its provenance: stealing the words of actual writers and artists to feed its artificiality.